Data Engineering Zoomcamp Week 2: Workflow Orchestration with Mage.AI

Welcome back to the Data Engineering Zoomcamp! In Week 2, we're diving into workflow orchestration using the Mage.AI platform.

To start this week's material, clone this repository and follow the steps in its README file. The repository contains a Docker Compose template for getting started with a new Mage project, so Docker must be installed locally. If you don't have Docker yet, you can follow the instructions here. But first, let's build a deeper understanding of orchestration and the Mage platform.

Intro to Orchestration

What is orchestration? Orchestration refers to the coordination and management of various tasks, processes, and data workflows to achieve a specific outcome or goal. It is the process of organising and controlling the flow of data and operations within a system or a series of connected systems.

In simpler terms, imagine you have multiple data processing tasks that need to be executed in a specific order, with some tasks depending on the completion of others. Orchestration helps you define, schedule, and manage the execution of these tasks in a coherent and efficient manner.

For example, in a data pipeline, you might have tasks like extracting data from a source, transforming it, and loading it into a destination. Orchestration ensures that these tasks are executed in the correct sequence, handles dependencies between tasks, and manages the overall flow of data through the pipeline.
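
To make the idea concrete, here is a minimal sketch of what an orchestrator does at its core: run tasks in dependency order and hand each task the outputs of its upstream tasks. It uses Python's standard-library `graphlib` (Python 3.9+). The task names and toy data are hypothetical; tools like Mage add scheduling, logging, and a UI on top of this basic idea.

```python
from graphlib import TopologicalSorter
from typing import Any, Callable


def run_pipeline(
    tasks: dict[str, Callable[..., Any]],
    upstream: dict[str, list[str]],
) -> dict[str, Any]:
    """Run every task after all of its upstream tasks, passing their outputs in as arguments."""
    # Map each task to the tasks it depends on; tasks without dependencies get an empty list.
    graph = {name: upstream.get(name, []) for name in tasks}
    results: dict[str, Any] = {}
    # static_order() yields each task only after its predecessors and raises
    # graphlib.CycleError if the dependencies form a cycle.
    for name in TopologicalSorter(graph).static_order():
        args = [results[dep] for dep in upstream.get(name, [])]
        results[name] = tasks[name](*args)
    return results


# A toy extract -> transform -> load pipeline (names and data are illustrative)
tasks = {
    "extract": lambda: [3, 1, 2],
    "transform": lambda rows: sorted(rows),
    "load": lambda rows: f"loaded {len(rows)} rows",
}
upstream = {"transform": ["extract"], "load": ["transform"]}

results = run_pipeline(tasks, upstream)
print(results["load"])  # loaded 3 rows
```

Declaring dependencies instead of a hard-coded call sequence is what lets an orchestrator work out the execution order itself and reject cyclic pipelines.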

In Week 2, we learned how to organise and control the execution of data processing tasks efficiently using the Mage.AI platform. This covered the basics of orchestration, why coordinating tasks matters, and how Mage supports seamless data integration and transformation.

Intro to Mage

Mage is an open-source, hybrid framework for data transformation and integration. In the data engineering toolbox, it stands out for making data pipelines easy to build and manage.

Mage accommodates both local and cloud-based data processing, providing data engineers with the flexibility to seamlessly work across diverse environments. Its core functionalities revolve around data transformation and integration, forming the backbone of efficient pipelines.

Mage excels at orchestrating the flow of data: it makes sure tasks execute in the correct order and manages complex data workflows effectively. Its integration with Docker further improves usability by simplifying dependency management and deployment.

Videos on Mage for the DE Zoomcamp can be found here.

ETL: Extract data from API to GCS

Now that we have cloned the repository, started the Docker container, and opened http://localhost:6789 in the browser, let's get practical: build an ETL pipeline that loads data from an API into GCS. Whether you write the data partitioned or unpartitioned is your call.

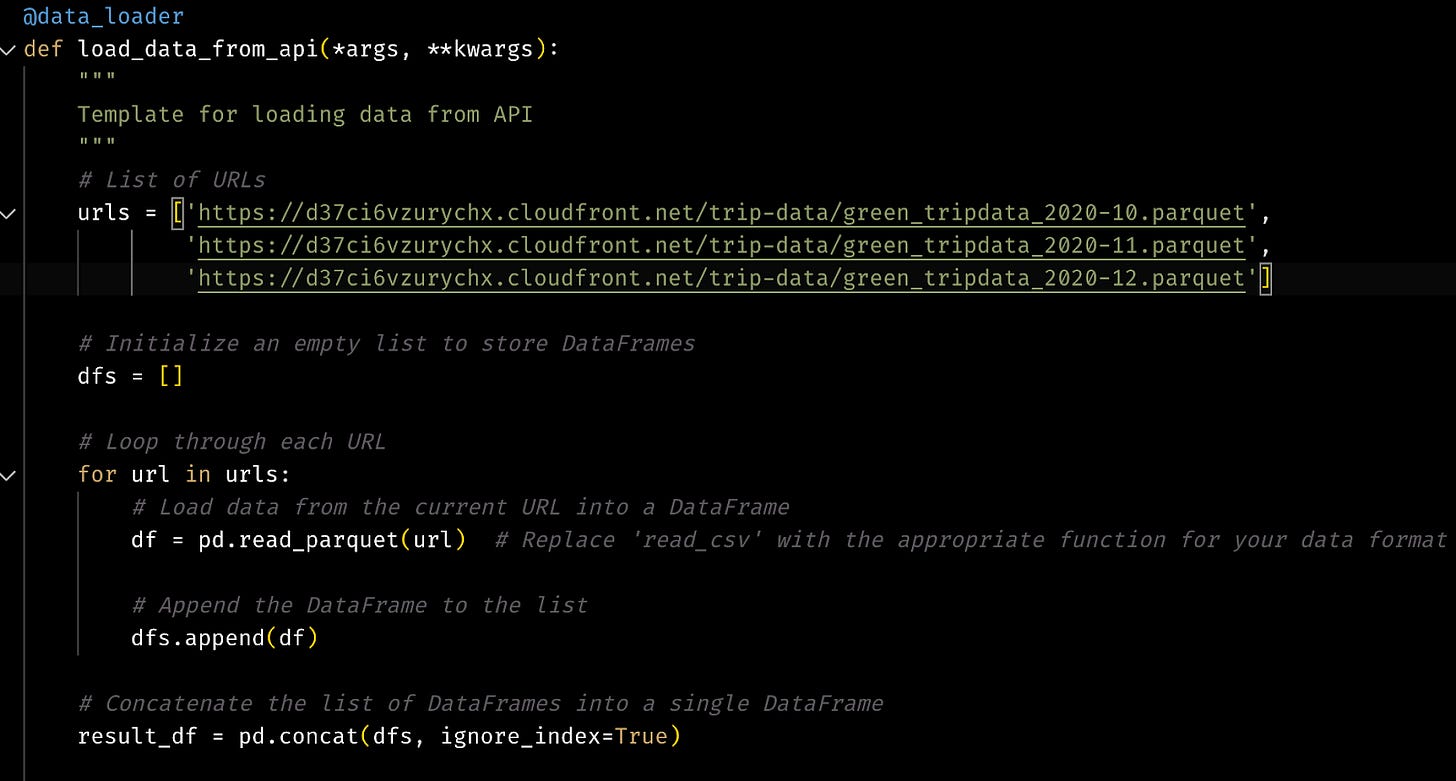

To speed things up, I worked with the datasets we used for the homework. The image above shows a snippet of the @data_loader block in Mage, where we had to read the green taxi data for the final quarter of 2020 (months 10, 11 and 12).
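
A loader like that can be sketched in plain pandas. This version assumes the monthly files come from the DataTalksClub `nyc-tlc-data` GitHub releases; that URL pattern and the dtype subset are assumptions, so adjust them to your source. In Mage, the body of `load_green_taxi` would sit inside a function decorated with `@data_loader`; here it is plain Python so it can run on its own.

```python
from collections.abc import Iterable

import pandas as pd

# Assumption: the DataTalksClub mirror of the NYC TLC green taxi files
BASE_URL = "https://github.com/DataTalksClub/nyc-tlc-data/releases/download/green"

# Hypothetical dtype subset: nullable integers keep the schema stable across months
TAXI_DTYPES = {
    "VendorID": "Int64",
    "passenger_count": "Int64",
    "payment_type": "Int64",
    "store_and_fwd_flag": "str",
}
PARSE_DATES = ["lpep_pickup_datetime", "lpep_dropoff_datetime"]


def green_taxi_urls(year: int, months: Iterable[int]) -> list[str]:
    """Build one download URL per month, e.g. .../green_tripdata_2020-10.csv.gz."""
    return [f"{BASE_URL}/green_tripdata_{year}-{month:02d}.csv.gz" for month in months]


def load_green_taxi(year: int = 2020, months: Iterable[int] = (10, 11, 12)) -> pd.DataFrame:
    """Download each monthly file and stack them into one DataFrame."""
    frames = [
        pd.read_csv(url, compression="gzip", dtype=TAXI_DTYPES, parse_dates=PARSE_DATES)
        for url in green_taxi_urls(year, months)
    ]
    return pd.concat(frames, ignore_index=True)
```

Fixing the dtypes up front matters when concatenating months: without it, a column that has missing values in one month but not another can be inferred as float in one file and int in the next.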

ETL: Transforming data

Transforming data is a crucial step in the data processing pipeline that improves the quality, consistency, and usability of data. Typical transformations include normalisation and standardisation, data cleaning, aggregation and summarisation, converting data types, and handling missing or outlier values. Together, these make the data more effective and valuable for analysis and decision-making.
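
A few of these transformations, sketched in pandas. The sketch assumes green taxi column names (`passenger_count`, `trip_distance`, `lpep_pickup_datetime`); the specific rules, dropping zero-passenger or zero-distance trips and renaming columns to snake_case, are illustrative choices rather than a fixed recipe. In Mage, this logic would live in a `@transformer` block.

```python
import re

import pandas as pd


def to_snake_case(name: str) -> str:
    """Convert CamelCase column names such as 'VendorID' or 'PULocationID' to snake_case."""
    # Insert '_' between a lower-case letter/digit and an upper-case letter,
    # and between an acronym and a following capitalised word (e.g. 'PULocation').
    name = re.sub(r"(?<=[a-z0-9])(?=[A-Z])|(?<=[A-Z])(?=[A-Z][a-z])", "_", name)
    return name.lower()


def transform(df: pd.DataFrame) -> pd.DataFrame:
    # Cleaning: keep only trips with passengers and a positive distance
    # (rows with missing values fail the comparison and are dropped as well)
    mask = (df["passenger_count"] > 0) & (df["trip_distance"] > 0)
    df = df.loc[mask].copy()
    # Type conversion: parse the pickup timestamp and derive a date column
    df["lpep_pickup_datetime"] = pd.to_datetime(df["lpep_pickup_datetime"])
    df["lpep_pickup_date"] = df["lpep_pickup_datetime"].dt.date
    # Standardisation: consistent snake_case column names
    df.columns = [to_snake_case(col) for col in df.columns]
    return df
```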

ETL: GCS to BigQuery and Parameterised Execution

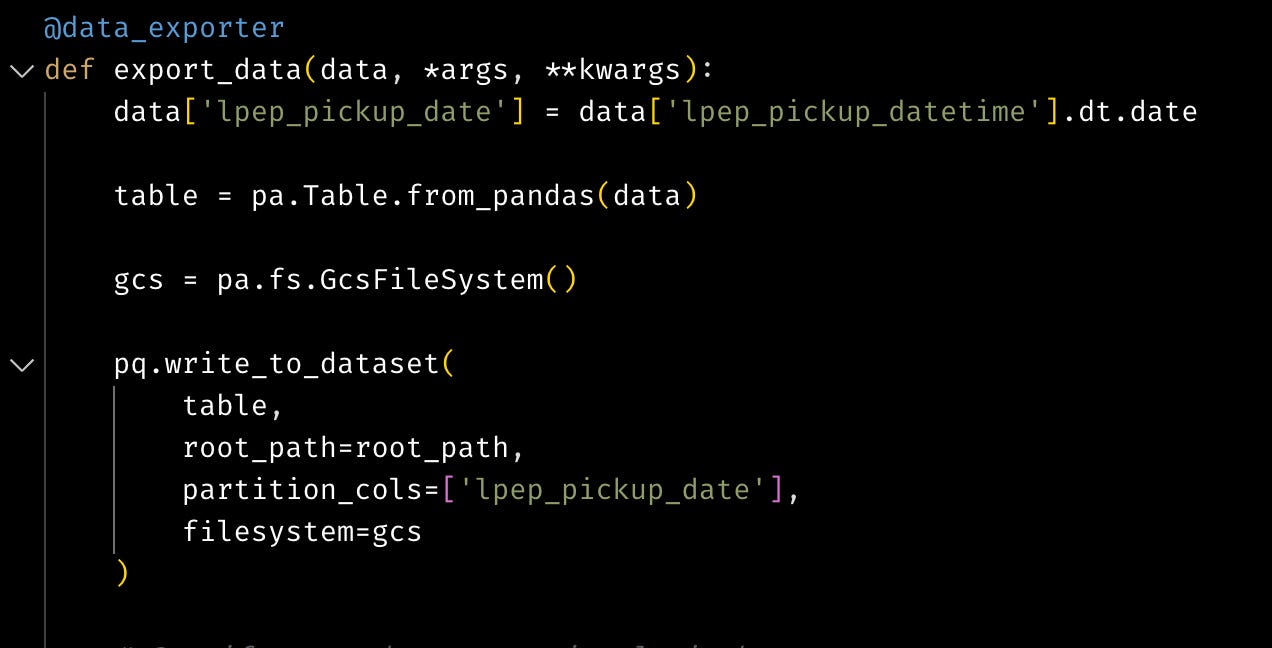

Here, Mage moves data from GCS (Google Cloud Storage) into BigQuery, a standard data engineering workflow. We also experimented with Mage's built-in runtime variables and how to use them to parameterise our pipelines.
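
Mage passes runtime variables to block functions as keyword arguments, so parameterising a block largely means reading values from `kwargs`. The sketch below builds a date-based GCS object key. It assumes `execution_date` arrives as a `datetime` at run time (falling back to the current time for local runs), and `taxi_color` is a hypothetical custom variable.

```python
from datetime import datetime


def build_object_key(**kwargs) -> str:
    """Return a date-based object key such as 'green/2020/10/01/daily_trips.parquet'."""
    # Assumed to be injected by Mage at run time; falls back to "now" for local runs
    run_date = kwargs.get("execution_date") or datetime.now()
    # Hypothetical custom runtime variable with a default value
    taxi_color = kwargs.get("taxi_color", "green")
    return f"{taxi_color}/{run_date:%Y/%m/%d}/daily_trips.parquet"


print(build_object_key(execution_date=datetime(2020, 10, 1)))
# green/2020/10/01/daily_trips.parquet
```

Because each scheduled run receives its own `execution_date`, repeated runs write to separate paths instead of overwriting a single file.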

Deployment

It is time to deploy Mage using Terraform and Google Cloud. For this, I reused my Terraform file from Week 1, which creates a GCS bucket and a BigQuery dataset. Terraform lets us create cloud resources automatically instead of clicking through the Google Cloud console. Once the ETL pipeline runs on the deployed instance, the project is fully deployed.

Conclusion

As you continue this data engineering adventure, don't forget the repository: it's your engineer's toolbox, with slides, datasets, and resources neatly organised. The journey doesn't end here. Next week is all about data warehousing and BigQuery.

So, data engineers: keep coding and keep exploring.